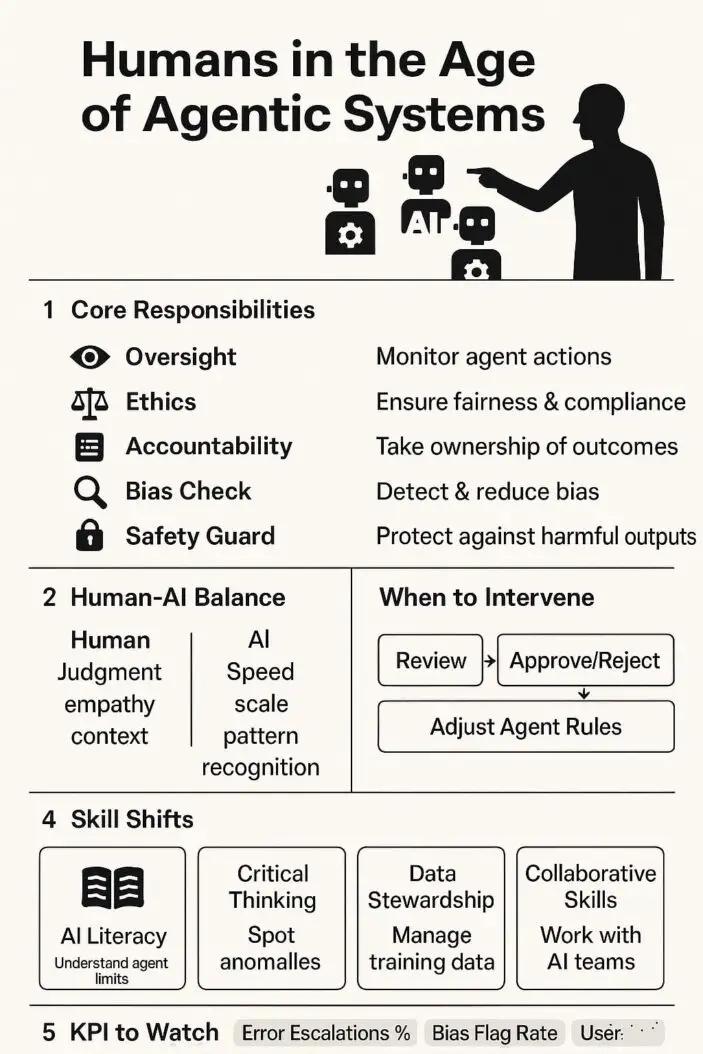

The rise of AI agents in workplaces brings a critical question: what are our human responsibilities when machines start making decisions? The answer is clear: humans must maintain oversight, ensure ethical use, and preserve human judgment in critical areas while adapting to collaborative relationships with intelligent systems.

As agentic AI systems become more autonomous, human responsibility actually increases, not decreases. We become the guardians of ethical decision-making, the architects of AI behavior, and the final arbiters of complex judgments that require human values and context.

Understanding Agentic AI Systems in the Workplace

Agentic AI systems are autonomous software programs that can perceive their environment, make decisions, and take actions without constant human direction. Unlike simple automation tools, these systems can adapt, learn, and modify their behavior based on changing circumstances.

Common workplace examples include:

- AI assistants that manage schedules and prioritize tasks

- Automated customer service systems that resolve complex inquiries

- AI-powered hiring tools that screen candidates

- Financial systems that make trading or lending decisions

- Supply chain management AI that adjusts inventory and logistics

These systems differ from traditional software because they operate with degrees of independence. They don’t just follow pre-programmed rules—they make contextual decisions based on data patterns and learned behaviors.

Core Human Responsibilities in AI-Integrated Workplaces

1. Maintaining Strategic Oversight and Governance

Humans must establish clear boundaries for AI operations. This means defining which decisions AI can make independently and which require human approval.

Key oversight responsibilities:

- Set decision thresholds that trigger human review

- Monitor AI performance metrics regularly

- Establish escalation protocols for edge cases

- Maintain audit trails of AI decisions and outcomes

- Create feedback loops between AI actions and human evaluation

Example: A marketing AI might automatically adjust ad spending up to $10,000, but any changes above that threshold require human approval. Weekly performance reviews ensure the AI’s decisions align with business goals.
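The threshold rule in this example can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the names `SpendChange`, `route_spend_change`, and `APPROVAL_THRESHOLD_USD` are hypothetical, and the sketch assumes the $10,000 limit applies to the size of each individual change, whether an increase or a decrease.

```python
from dataclasses import dataclass

# Assumed limit, taken from the example above: changes up to $10,000 are automatic.
APPROVAL_THRESHOLD_USD = 10_000


@dataclass
class SpendChange:
    """A proposed ad-spend adjustment (hypothetical structure for illustration)."""
    campaign: str
    amount_usd: float  # positive = increase, negative = decrease


def route_spend_change(change: SpendChange,
                       threshold: float = APPROVAL_THRESHOLD_USD) -> str:
    """Return "auto_approve" or "human_review" for a proposed change."""
    # Compare the magnitude so that large cuts are reviewed as well as large increases.
    if abs(change.amount_usd) <= threshold:
        return "auto_approve"
    return "human_review"


if __name__ == "__main__":
    print(route_spend_change(SpendChange("spring_sale", 7_500)))
    print(route_spend_change(SpendChange("spring_sale", -12_000)))
```

Keeping the threshold as a named, configurable value makes it easy for the humans who own the policy to adjust it after a weekly review.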

2. Ensuring Ethical AI Behavior and Fairness

Humans bear full responsibility for the ethical implications of AI decisions. This includes preventing bias, ensuring fairness, and maintaining transparency in automated processes.

Ethical responsibilities include:

- Regular bias testing across different demographic groups

- Implementing explainable AI systems where decisions can be understood

- Creating diverse teams to review AI behavior patterns

- Establishing clear ethical guidelines for AI decision-making

- Protecting sensitive data and maintaining privacy standards

A study by MIT researchers reportedly found that AI hiring tools showed significant bias against women and minorities when not properly monitored. Findings like this show why human oversight is essential, not optional.
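One simple way to start bias testing across demographic groups is to compare selection rates. The sketch below assumes screening outcomes are available as `(group, selected)` pairs and uses a 0.8 ratio as a screening heuristic, in the spirit of the "four-fifths" rule of thumb from US employment selection guidelines. The function names are hypothetical, and a flag here is a prompt for human investigation, not a legal or statistical verdict.

```python
from collections import defaultdict


def selection_rates(records):
    """records: iterable of (group, selected) pairs. Returns {group: selection rate}."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in records:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def adverse_impact_ratios(rates):
    """Divide each group's selection rate by the highest group's rate."""
    best = max(rates.values())
    if best == 0:
        # Nobody was selected in any group, so there is no disparity to measure.
        return {group: 1.0 for group in rates}
    return {group: rate / best for group, rate in rates.items()}


def flag_groups(records, min_ratio=0.8):
    """Return groups whose ratio falls below min_ratio (assumed heuristic cut-off)."""
    ratios = adverse_impact_ratios(selection_rates(records))
    return sorted(group for group, ratio in ratios.items() if ratio < min_ratio)


if __name__ == "__main__":
    # Made-up illustrative data: 6 of 10 selected in group A, 3 of 10 in group B.
    sample = ([("A", True)] * 6 + [("A", False)] * 4
              + [("B", True)] * 3 + [("B", False)] * 7)
    print(selection_rates(sample))
    print(flag_groups(sample))
```

A check like this is only a first pass. Small sample sizes can make the ratios noisy, so flagged results should go to a diverse review team rather than trigger automatic conclusions.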

3. Preserving Human Judgment in Critical Decisions

Certain decisions require uniquely human capabilities: empathy, moral reasoning, creative problem-solving, and understanding of complex social contexts.

Areas requiring human judgment:

- Employee disciplinary actions and terminations

- Strategic business pivots during crises

- Creative campaign development and brand positioning

- Customer complaints involving emotional distress

- Decisions affecting employee safety and well-being

4. Continuous Learning and Skill Development

As AI handles routine tasks, humans must develop complementary skills that add unique value alongside AI capabilities.

Essential skill areas:

- AI literacy and understanding system capabilities

- Data interpretation and pattern recognition

- Emotional intelligence and relationship management

- Complex problem-solving and creative thinking

- Ethical reasoning and decision-making frameworks

The Collaboration Framework: Humans and AI Working Together

Defining Roles and Boundaries

| Decision Type | AI Role | Human Role |
|---|---|---|
| Routine Operations | Fully autonomous within parameters | Set parameters, monitor performance |
| Tactical Decisions | Provide recommendations with confidence scores | Review recommendations, make final decisions |
| Strategic Decisions | Analyze data, model scenarios | Interpret results, consider broader implications |
| Ethical Dilemmas | Flag potential issues | Apply moral reasoning, make ethical judgments |
| Crisis Response | Gather information, suggest initial actions | Lead response, make critical decisions |
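The role table above can also be encoded as data, so that a workflow tool can look up who does what for a given decision type. The sketch below is one possible encoding. The names `DecisionType`, `ROLE_MATRIX`, and `human_decides` are hypothetical, and the rule that only routine operations proceed without a human making the final call is this sketch's reading of the table, not a stated policy.

```python
from enum import Enum
from typing import NamedTuple


class DecisionType(Enum):
    ROUTINE = "Routine Operations"
    TACTICAL = "Tactical Decisions"
    STRATEGIC = "Strategic Decisions"
    ETHICAL = "Ethical Dilemmas"
    CRISIS = "Crisis Response"


class Roles(NamedTuple):
    ai: str
    human: str


# Role descriptions copied from the table above.
ROLE_MATRIX = {
    DecisionType.ROUTINE: Roles("Fully autonomous within parameters",
                                "Set parameters, monitor performance"),
    DecisionType.TACTICAL: Roles("Provide recommendations with confidence scores",
                                 "Review recommendations, make final decisions"),
    DecisionType.STRATEGIC: Roles("Analyze data, model scenarios",
                                  "Interpret results, consider broader implications"),
    DecisionType.ETHICAL: Roles("Flag potential issues",
                                "Apply moral reasoning, make ethical judgments"),
    DecisionType.CRISIS: Roles("Gather information, suggest initial actions",
                               "Lead response, make critical decisions"),
}


def human_decides(decision_type: DecisionType) -> bool:
    """Assumed reading of the table: only routine operations run without a human decision."""
    return decision_type is not DecisionType.ROUTINE


def describe(decision_type: DecisionType) -> str:
    """Summarize the division of labor for one decision type."""
    roles = ROLE_MATRIX[decision_type]
    return f"{decision_type.value}: AI -> {roles.ai}; human -> {roles.human}"
```

For example, `describe(DecisionType.ETHICAL)` returns a one-line summary that could be shown to a reviewer alongside the flagged item.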

Building Effective Human-AI Teams

Successful collaboration requires clear communication protocols between humans and AI systems. This means:

- Transparent reporting: AI systems should clearly communicate their confidence levels, data sources, and reasoning processes

- Regular calibration: Human feedback helps AI systems improve their decision-making over time

- Complementary strengths: Humans handle creativity and empathy while AI manages data processing and pattern recognition
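As a rough illustration of transparent reporting and calibration, the sketch below shows a record an AI system might attach to each decision, with its confidence level, data sources, and reasoning, plus room for human feedback. The `DecisionReport` class, its field names, and the 0.8 review cut-off are assumptions made for this example, not features of any particular product.

```python
import json
from dataclasses import dataclass, asdict, field


@dataclass
class DecisionReport:
    """Hypothetical per-decision record for human reviewers and audit trails."""
    decision: str
    confidence: float          # 0.0 to 1.0, as reported by the AI system
    data_sources: list[str]    # where the inputs came from
    reasoning: str             # short explanation of the decision
    human_feedback: list[str] = field(default_factory=list)

    def __post_init__(self):
        if not 0.0 <= self.confidence <= 1.0:
            raise ValueError("confidence must be between 0 and 1")

    def needs_review(self, min_confidence: float = 0.8) -> bool:
        """Flag low-confidence or unsourced decisions (0.8 is an assumed cut-off)."""
        return self.confidence < min_confidence or not self.data_sources

    def add_feedback(self, note: str) -> None:
        """Record a reviewer's comment so it can inform later calibration."""
        self.human_feedback.append(note)

    def to_json(self) -> str:
        """Serialize the report, for example to append it to an audit log."""
        return json.dumps(asdict(self), sort_keys=True)


if __name__ == "__main__":
    report = DecisionReport(
        decision="reorder stock",
        confidence=0.65,
        data_sources=["inventory_db"],
        reasoning="stock level fell below the reorder point",
    )
    if report.needs_review():
        report.add_feedback("Approved, but seasonal demand was not considered.")
    print(report.to_json())
```

Storing the feedback next to the original decision keeps the calibration loop and the audit trail in one place.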

Industry-Specific Responsibilities

Healthcare Settings

In medical environments, human responsibility intensifies due to life-and-death implications. Healthcare professionals must:

- Verify AI diagnoses with clinical expertise

- Maintain patient relationships and bedside manner

- Handle complex medical ethics decisions

- Ensure AI recommendations consider individual patient contexts

Financial Services

Financial professionals working with AI trading and lending systems must:

- Monitor for market manipulation or unfair lending practices

- Ensure compliance with regulatory requirements

- Maintain fiduciary responsibility to clients

- Handle complex financial planning that requires human insight

Human Resources

HR professionals using AI for hiring and employee management must:

- Prevent discriminatory practices in AI-driven decisions

- Maintain human connection in employee relations

- Handle sensitive personnel issues requiring empathy

- Ensure AI decisions align with company culture and values

Implementation Strategy: Building Responsible AI Governance

Phase 1: Assessment and Planning (Months 1-2)

Inventory existing AI systems:

- Document all AI tools and their decision-making capabilities

- Identify potential risk areas and ethical concerns

- Assess current human oversight mechanisms

- Map stakeholder roles and responsibilities

Establish governance framework:

- Create AI ethics committee with diverse representation

- Develop clear policies for AI use and oversight

- Set up regular review and audit processes

- Define escalation procedures for problematic AI behavior

Phase 2: Training and Skill Development (Months 2-4)

Comprehensive team training:

- AI literacy programs for all employees

- Specialized training for AI system managers

- Ethics training focused on AI decision-making

- Technical skills development for system monitoring

Communication protocols:

- Clear channels for reporting AI concerns

- Regular team meetings to discuss AI performance

- Documentation standards for AI decisions

- Feedback mechanisms for continuous improvement

Phase 3: Monitoring and Optimization (Ongoing)

Performance tracking:

- Key metrics for AI decision quality

- Regular bias testing and fairness audits

- Employee satisfaction with AI collaboration

- Customer impact assessments

Continuous improvement:

- Regular system updates based on human feedback

- Adaptation of oversight procedures as AI capabilities evolve

- Integration of new ethical guidelines and regulations

- Expansion of successful practices across the organization

Managing the Transition: Common Challenges and Solutions

Challenge 1: Employee Resistance and Fear

Many workers fear AI will replace them entirely. Address this through:

- Clear communication about AI’s complementary role

- Retraining programs that highlight human-AI collaboration

- Success stories showcasing enhanced productivity

- Career development paths that incorporate AI skills

Challenge 2: Over-reliance on AI Systems

Some teams may become too dependent on AI and lose critical thinking skills. To counter this:

- Implement regular “human-only” decision exercises

- Require human validation of AI recommendations

- Create scenarios where employees must work without AI assistance

- Maintain backup procedures for AI system failures

Challenge 3: Accountability Confusion

When AI makes a wrong decision, responsibility can become unclear. To prevent this:

- Establish clear accountability chains before implementation

- Document decision-making processes thoroughly

- Create incident response procedures for AI errors

- Define legal and ethical responsibility at each level

The Future of Human Responsibility in AI Workplaces

As AI systems become more sophisticated, human responsibilities will evolve rather than diminish.

Emerging areas of responsibility:

- Managing AI systems that can modify their own code

- Handling AI creativity and intellectual property questions

- Navigating AI systems that develop unexpected behaviors

- Maintaining human agency in increasingly automated environments

Preparing for advanced AI:

- Developing robust testing frameworks for complex AI behavior

- Creating adaptable governance structures

- Building diverse teams with complementary AI expertise

- Establishing industry standards for human-AI collaboration

Organizations that proactively address these evolving responsibilities will build more resilient, ethical, and effective workplaces.

Measuring Success: Key Performance Indicators

Track these metrics to ensure responsible AI implementation:

Operational Metrics:

- Decision accuracy rates for human-AI collaborative processes

- Time to resolution for AI-flagged issues requiring human intervention

- Employee satisfaction scores with AI collaboration tools

- Customer satisfaction with AI-enhanced services

Ethical and Governance Metrics:

- Bias testing results across demographic groups

- Compliance audit scores for AI decision-making

- Number of ethical concerns raised and resolved

- Transparency scores for AI decision explanations

Strategic Metrics:

- Business outcomes improved through human-AI collaboration

- Employee skill development progress in AI-related areas

- Innovation rates in departments using collaborative AI

- Risk reduction in areas with proper AI oversight

Conclusion

Human responsibility in AI-integrated workplaces centers on maintaining ethical oversight, preserving critical human judgment, and fostering effective collaboration with intelligent systems. Rather than being replaced by AI, humans must evolve into AI stewards—professionals who guide, monitor, and enhance artificial intelligence to serve human values and organizational goals.

The key is balance: leveraging AI’s capabilities for efficiency and insights while maintaining human control over critical decisions and ethical standards. Organizations that successfully navigate this balance will create workplaces where human creativity and AI capability combine to achieve results neither could accomplish alone.

Success requires proactive planning, comprehensive training, and ongoing commitment to ethical AI practices. By embracing these responsibilities now, we can shape a future where agentic AI systems enhance human potential rather than diminish human agency.

Frequently Asked Questions

Who is ultimately responsible when an AI system makes a harmful decision?

The organization and the humans who deployed, configured, and oversaw the AI system bear primary responsibility. This includes the executives who approved its use, the technical teams who implemented it, and the managers who monitored its performance. Legal responsibility may vary by jurisdiction, but ethical responsibility clearly rests with the human decision-makers who chose to delegate authority to the AI system.

How can small businesses manage AI responsibility without dedicated AI teams?

Small businesses should start with clearly defined use cases and simple oversight procedures. Focus on AI tools with strong explainability features, establish basic monitoring routines, and consider partnering with AI vendors who provide governance support. Begin with low-risk applications and gradually expand as you build expertise and confidence in your oversight capabilities.

What happens if an employee refuses to work with AI systems?

Organizations should provide training and support to help employees adapt, but may need to consider role adjustments if someone cannot effectively collaborate with essential AI tools. The key is distinguishing between reasonable concerns that need to be addressed and resistance to necessary technological evolution. Clear communication about AI’s complementary role often resolves initial resistance.

How often should we audit our AI systems for bias and ethical issues?

High-impact AI systems affecting hiring, lending, or customer treatment should be audited quarterly or whenever significant changes occur. Lower-impact systems may be reviewed annually. However, continuous monitoring through automated fairness metrics and regular spot-checks should be ongoing. The frequency depends on the system’s potential impact and the rate of change in your data or business environment.
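The cadence described above can be turned into a simple scheduling check. In this sketch, "quarterly" is approximated as 91 days and "annually" as 365 days, and the `impact` labels and function name are hypothetical. It assumes a significant change forces an immediate audit only for high-impact systems, as the answer above describes.

```python
from datetime import date, timedelta

# Assumed intervals: roughly quarterly for high-impact systems, annually for low-impact.
AUDIT_INTERVAL_DAYS = {"high": 91, "low": 365}


def audit_due(last_audit: date, impact: str, today: date,
              significant_change: bool = False) -> bool:
    """Return True if a system with the given impact level should be audited now."""
    if impact not in AUDIT_INTERVAL_DAYS:
        raise ValueError(f"unknown impact level: {impact!r}")
    # High-impact systems are re-audited whenever a significant change occurs.
    if impact == "high" and significant_change:
        return True
    return today - last_audit >= timedelta(days=AUDIT_INTERVAL_DAYS[impact])


if __name__ == "__main__":
    print(audit_due(date(2025, 1, 1), "high", today=date(2025, 3, 1)))
    print(audit_due(date(2025, 1, 1), "high", today=date(2025, 3, 1),
                    significant_change=True))
```

A check like this complements, rather than replaces, the continuous monitoring and spot-checks mentioned above.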

Can AI systems be held legally accountable for their decisions?

Currently, AI systems cannot be held legally accountable—they are tools without legal personhood. Accountability flows to the humans and organizations that deploy and manage them. However, this legal landscape is evolving, with some jurisdictions developing specific AI liability frameworks. Regardless of legal developments, ethical responsibility for AI behavior remains with human stakeholders.
