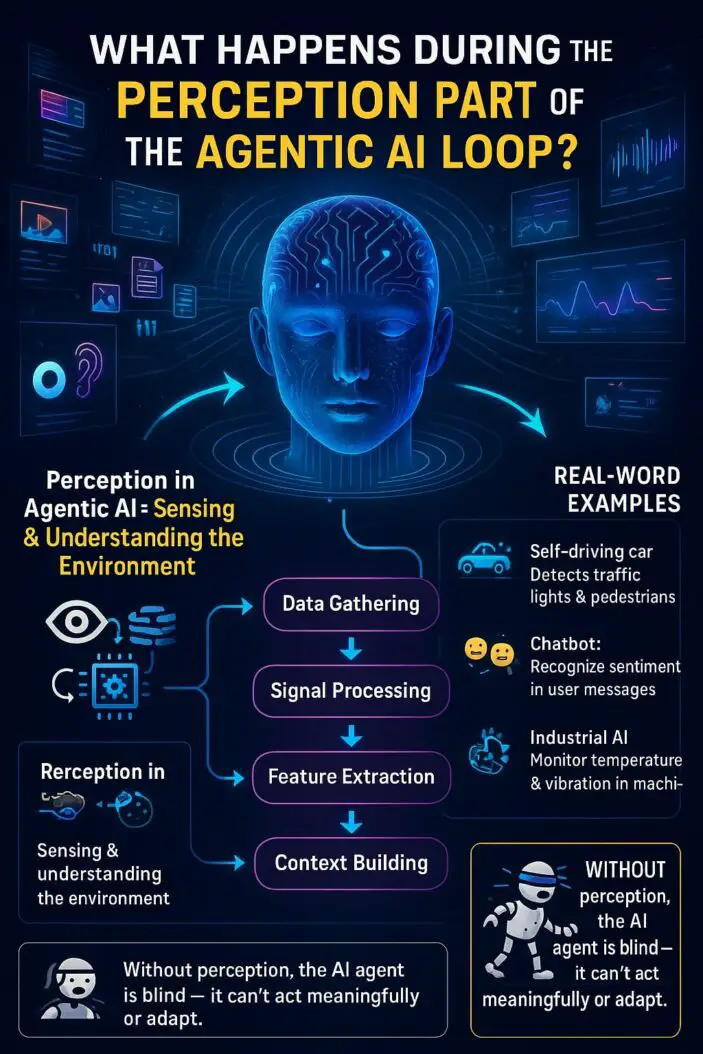

The perception phase is where agentic AI systems gather, process, and interpret information from their environment. This critical first step determines how well an AI agent can understand its surroundings and make informed decisions.

During perception, AI agents collect raw data through various sensors and inputs, then transform this information into meaningful representations they can use for reasoning and action. Without effective perception, even the most sophisticated AI agent becomes blind to its environment and cannot function properly.

Understanding the Agentic AI Loop

Before diving into perception specifics, you need to understand the complete agentic AI loop. This loop consists of four main phases:

- Perception: Gathering and interpreting environmental data

- Planning: Deciding what actions to take based on perceived information

- Action: Executing the planned actions in the environment

- Learning: Updating knowledge and improving future performance

The perception phase feeds directly into planning, making it the foundation of intelligent behavior in AI systems.
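To make the loop concrete, here is a minimal Python sketch of the four phases for a toy thermostat agent. The `ThermostatAgent` class, its target temperature, and the simulated one-degree effect of each action are hypothetical. The point is only to show how perception output flows into planning, action, and learning.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class ThermostatAgent:
    """Hypothetical agent that keeps a room near a target temperature."""
    target: float = 21.0
    tolerance: float = 0.5
    history: List[Tuple[float, str]] = field(default_factory=list)

    def perceive(self, raw_reading: float) -> dict:
        # Perception: turn a raw sensor value into a structured observation.
        return {"temperature": raw_reading, "error": raw_reading - self.target}

    def plan(self, observation: dict) -> str:
        # Planning: choose an action based on the perceived state.
        if observation["error"] > self.tolerance:
            return "cool"
        if observation["error"] < -self.tolerance:
            return "heat"
        return "idle"

    def act(self, action: str) -> float:
        # Action: return the simulated temperature change the action causes.
        return {"cool": -1.0, "heat": 1.0, "idle": 0.0}[action]

    def learn(self, observation: dict, action: str) -> None:
        # Learning: this sketch only records experience; a real agent would update a model.
        self.history.append((observation["temperature"], action))


def run_loop(agent: ThermostatAgent, start_temp: float, steps: int) -> float:
    """Run the perceive -> plan -> act -> learn cycle and return the final temperature."""
    temp = start_temp
    for _ in range(steps):
        obs = agent.perceive(temp)
        action = agent.plan(obs)
        temp += agent.act(action)
        agent.learn(obs, action)
    return temp
```

Running `run_loop(ThermostatAgent(), 24.0, 5)` cools the room to 21.0 and then idles, leaving five `(temperature, action)` pairs in `history`.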

What Is Perception in Agentic AI Systems?

Perception in agentic AI refers to the process of acquiring, processing, and interpreting sensory information about the environment. This phase converts raw environmental data into structured, meaningful information the AI agent can understand and use.

Think of perception as the AI agent’s sensory system. Just as humans use their eyes, ears, and other senses to understand the world, AI agents use various input mechanisms to gather information about their operational environment.

Key Components of AI Perception

The perception system typically includes:

- Data Collection: Gathering raw information from various sources

- Preprocessing: Cleaning and organizing the collected data

- Feature Extraction: Identifying relevant patterns and characteristics

- Interpretation: Converting processed data into meaningful representations

- Context Integration: Combining new information with existing knowledge

The Step-by-Step Perception Process

Step 1: Environmental Sensing

The perception process begins with environmental sensing. AI agents collect data through multiple channels:

- Visual Inputs: Cameras capture images and video streams

- Audio Inputs: Microphones record sound and speech

- Text Inputs: Natural language processing systems read documents and messages

- Sensor Data: IoT devices provide temperature, pressure, motion, and other measurements

- Digital Inputs: APIs deliver structured data from databases and web services
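One common way to organize these channels is to wrap every reading in a uniform record tagged with its source, modality, and timestamp, so that later steps can clean, synchronize, and fuse it. This is a minimal sketch. The `Observation` and `collect` names and the lambda read functions are hypothetical stand-ins for real device or API clients.

```python
import time
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Tuple


@dataclass(frozen=True)
class Observation:
    source: str       # which channel produced the data, e.g. "cam_0"
    modality: str     # "visual", "audio", "text", "sensor", or "digital"
    timestamp: float  # wall-clock time (seconds since the epoch) of the read
    payload: Any      # raw data; interpretation happens in later steps


# Maps a source name to (modality, read function). The read functions are
# hypothetical stand-ins for real camera, microphone, IoT, or API clients.
SourceRegistry = Dict[str, Tuple[str, Callable[[], Any]]]


def collect(sources: SourceRegistry) -> List[Observation]:
    """Poll every registered source once and wrap each reading in an Observation."""
    observations = []
    for name, (modality, read_fn) in sources.items():
        try:
            payload = read_fn()
        except Exception:
            # A failing sensor should not abort the whole perception cycle;
            # a real system would also log the failure.
            continue
        observations.append(Observation(name, modality, time.time(), payload))
    return observations


def offline_camera() -> Any:
    raise OSError("camera offline")  # simulates a sensor failure


sources: SourceRegistry = {
    "thermo_1": ("sensor", lambda: 22.5),
    "chat": ("text", lambda: "Turn on the lights"),
    "cam_0": ("visual", offline_camera),
}
observations = collect(sources)
```

Here the failed camera read is skipped, so `observations` holds only the thermometer and chat readings.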

Step 2: Data Preprocessing

Raw sensory data often contains noise, errors, or irrelevant information. The preprocessing stage addresses these issues:

- Noise Reduction: Filtering out irrelevant background information

- Data Cleaning: Removing corrupted or incomplete data points

- Normalization: Standardizing data formats and scales

- Synchronization: Aligning data from multiple sources by time or sequence
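The four preprocessing operations can be sketched with the Python standard library alone. These are deliberately simple choices: a trailing moving average for noise reduction, min-max scaling for normalization, and nearest-timestamp matching for synchronization. The function names and the 50 ms default pairing tolerance are illustrative assumptions, and production systems often use more sophisticated filters.

```python
import math
from bisect import bisect_left
from typing import List, Optional, Sequence, Tuple


def clean(values: Sequence[Optional[float]]) -> List[float]:
    """Data cleaning: drop missing (None) and NaN readings."""
    return [v for v in values if v is not None and not math.isnan(v)]


def moving_average(values: Sequence[float], window: int = 3) -> List[float]:
    """Noise reduction: smooth each point with the mean of the last `window` points."""
    out = []
    for i in range(len(values)):
        chunk = values[max(0, i - window + 1): i + 1]
        out.append(sum(chunk) / len(chunk))
    return out


def min_max_normalize(values: Sequence[float]) -> List[float]:
    """Normalization: rescale to [0, 1]; a constant series maps to all zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def synchronize(a: List[Tuple[float, float]], b: List[Tuple[float, float]],
                max_gap: float = 0.05) -> List[Tuple[float, float, float]]:
    """Synchronization: pair each sample in `a` with the nearest-in-time sample in `b`.

    Both inputs are (timestamp, value) lists sorted by timestamp. Pairs more
    than `max_gap` seconds apart are discarded.
    """
    b_times = [t for t, _ in b]
    paired = []
    for t, value_a in a:
        i = bisect_left(b_times, t)
        # The nearest neighbour is either just before or just after the insertion point.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(b)]
        if not candidates:
            continue
        j = min(candidates, key=lambda k: abs(b_times[k] - t))
        if abs(b_times[j] - t) <= max_gap:
            paired.append((t, value_a, b[j][1]))
    return paired
```

For example, `synchronize([(0.0, 1.0), (0.1, 2.0), (1.0, 3.0)], [(0.01, 10.0), (0.12, 20.0)])` pairs the first two samples and drops the third, because no sample in `b` lies within 50 ms of t = 1.0.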

Step 3: Feature Extraction

Feature extraction identifies the most important characteristics within the preprocessed data:

- Pattern Recognition: Finding recurring structures or arrangements

- Object Detection: Identifying distinct entities within visual or sensor data

- Semantic Analysis: Understanding meaning in text or speech

- Anomaly Detection: Spotting unusual patterns that might indicate problems
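Anomaly detection is the easiest of these to show in a few lines. The sketch below flags readings more than three standard deviations from the mean (a z-score test). The threshold and function name are assumptions, and this is only one simple technique among many.

```python
import statistics
from typing import List, Sequence


def zscore_anomalies(values: Sequence[float], threshold: float = 3.0) -> List[int]:
    """Return indices of readings whose absolute z-score exceeds `threshold`.

    Uses the population standard deviation. Returns no anomalies when the
    series is constant or has fewer than two points.
    """
    if len(values) < 2:
        return []
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0:
        return []
    return [i for i, v in enumerate(values) if abs(v - mean) / std > threshold]
```

One caveat: a single large outlier inflates the standard deviation it is measured against. With only ten readings, no point can score above 3, so the default threshold can never fire. For short series, a median-based score is often more robust.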

Step 4: Data Integration and Fusion

Multiple data sources must be combined to create a comprehensive understanding:

- Sensor Fusion: Combining information from different types of sensors

- Temporal Integration: Connecting current observations with historical data

- Spatial Integration: Understanding relationships between different locations

- Contextual Mapping: Relating new information to existing knowledge structures
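Sensor fusion has a classic closed form when several sensors measure the same quantity with independent, unbiased errors: weight each reading by the inverse of its variance. The fused value is Σ(xᵢ/σᵢ²) / Σ(1/σᵢ²), and the fused variance is 1 / Σ(1/σᵢ²). The sketch below assumes roughly Gaussian noise and known variances. The lidar and radar numbers are made up for illustration.

```python
from typing import List, Tuple


def fuse_inverse_variance(readings: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Fuse independent estimates of the same quantity.

    Each reading is (value, variance). Returns (fused_value, fused_variance).
    Assumes independent, unbiased sensor errors with known positive variances.
    """
    if not readings:
        raise ValueError("need at least one reading")
    if any(var <= 0 for _, var in readings):
        raise ValueError("variances must be positive")
    weights = [1.0 / var for _, var in readings]
    total = sum(weights)
    fused = sum(w * value for w, (value, _) in zip(weights, readings)) / total
    return fused, 1.0 / total


# Hypothetical example: distance to an obstacle from lidar (more precise) and
# radar (noisier), in metres, with variances in square metres.
distance, variance = fuse_inverse_variance([(10.0, 0.01), (10.4, 0.04)])
```

The fused estimate (about 10.08 m) sits closer to the more precise lidar reading, and its variance (0.008 m²) is lower than that of either sensor alone.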

Step 5: Representation Formation

The final step creates internal representations the AI agent can use for reasoning:

- Symbolic Representations: Creating logical statements and rules

- Vector Representations: Encoding information in numerical formats

- Graph Representations: Modeling relationships between entities

- Probabilistic Representations: Capturing uncertainty and confidence levels
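These representation types are often combined in a single structure. Below is a minimal scene-graph sketch. Each entity carries a symbolic label, a vector position, and a probabilistic confidence, and the relations between entities form the graph. The `Entity` and `SceneGraph` classes and the 0.5 confidence cut-off are hypothetical and not taken from any particular library.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class Entity:
    label: str                      # symbolic: class label, e.g. "pedestrian"
    confidence: float               # probabilistic: detector confidence in [0, 1]
    position: Tuple[float, float]   # vector: (x, y) in metres, agent-centred frame


@dataclass
class SceneGraph:
    entities: Dict[str, Entity] = field(default_factory=dict)
    # graph: edges stored as (subject, predicate, object) triples
    relations: List[Tuple[str, str, str]] = field(default_factory=list)

    def add(self, name: str, entity: Entity) -> None:
        self.entities[name] = entity

    def relate(self, subject: str, predicate: str, obj: str) -> None:
        if subject not in self.entities or obj not in self.entities:
            raise KeyError("add both entities before relating them")
        self.relations.append((subject, predicate, obj))

    def facts(self, min_confidence: float = 0.5) -> List[str]:
        """Export symbolic facts, skipping relations that involve a low-confidence entity."""
        out = []
        for s, p, o in self.relations:
            weakest = min(self.entities[s].confidence, self.entities[o].confidence)
            if weakest >= min_confidence:
                out.append(f"{p}({s}, {o})")
        return out


g = SceneGraph()
g.add("ped1", Entity("pedestrian", 0.92, (4.0, 1.5)))
g.add("cw1", Entity("crosswalk", 0.88, (4.0, 0.0)))
g.add("bag1", Entity("bag", 0.30, (6.0, 2.0)))
g.relate("ped1", "on", "cw1")
g.relate("bag1", "near", "ped1")
```

With the default cut-off, `g.facts()` emits only `on(ped1, cw1)`. The relation involving the low-confidence `bag1` detection is withheld from downstream reasoning.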

Types of Perception in Different AI Systems

Computer Vision Systems

Computer vision agents focus on visual perception:

- Object Recognition: Identifying and classifying objects in images

- Scene Understanding: Comprehending spatial relationships and context

- Motion Detection: Tracking movement and changes over time

- Depth Perception: Understanding three-dimensional spatial information

Natural Language Processing Systems

NLP agents specialize in linguistic perception:

- Text Understanding: Parsing grammar and extracting meaning

- Sentiment Analysis: Detecting emotional tone and attitudes

- Intent Recognition: Understanding what users want to accomplish

- Context Awareness: Maintaining conversation history and relevance

Robotics Systems

Robotic agents require multimodal perception:

- Spatial Awareness: Understanding position and orientation

- Obstacle Detection: Identifying barriers and navigation challenges

- Manipulation Planning: Perceiving objects for grasping and moving

- Human Interaction: Recognizing and responding to human presence

Autonomous Vehicle Systems

Self-driving cars need comprehensive environmental perception:

- Traffic Pattern Recognition: Understanding road conditions and traffic flow

- Pedestrian Detection: Identifying and predicting human movement

- Sign and Signal Recognition: Reading traffic control devices

- Weather Adaptation: Adjusting perception based on environmental conditions

Common Perception Challenges and Solutions

Challenge 1: Sensor Limitations

Problem: Individual sensors have inherent limitations and blind spots.

Solution: Implement sensor fusion techniques that combine multiple data sources. Use redundant sensors to verify information and fill gaps in coverage.

Challenge 2: Environmental Variability

Problem: Real-world conditions change constantly, affecting sensor performance.

Solution: Develop adaptive perception systems that adjust to different lighting, weather, and environmental conditions. Use robust preprocessing techniques that handle variability.

Challenge 3: Data Quality Issues

Problem: Noisy, incomplete, or corrupted data can mislead the perception system.

Solution: Implement comprehensive data validation and cleaning processes. Use confidence scoring to weight information reliability.
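Here is a minimal sketch of validation combined with confidence scoring. Each reading is checked against a plausible range and a freshness limit, and the resulting score weights it in the final estimate. The linear age decay, the -40 to 85 °C range, and the function names are assumptions chosen for illustration.

```python
import math
from typing import Iterable, Optional, Tuple


def score_reading(value: Optional[float], age_s: float,
                  valid_range: Tuple[float, float], max_age_s: float) -> float:
    """Return a confidence in [0, 1] for one reading (hypothetical scoring rule).

    Missing, NaN, or out-of-range values score 0. Otherwise confidence decays
    linearly with the reading's age and reaches 0 at `max_age_s`.
    """
    if value is None or math.isnan(value):
        return 0.0
    lo, hi = valid_range
    if not lo <= value <= hi:
        return 0.0
    if age_s < 0 or age_s >= max_age_s:
        return 0.0  # negative age suggests a clock error; too old means stale
    return 1.0 - age_s / max_age_s


def weighted_estimate(readings: Iterable[Tuple[float, float]]) -> Optional[float]:
    """Confidence-weighted mean of (value, confidence) pairs; None if nothing is usable."""
    usable = [(v, c) for v, c in readings if c > 0]
    if not usable:
        return None
    total = sum(c for _, c in usable)
    return sum(v * c for v, c in usable) / total


TEMP_RANGE = (-40.0, 85.0)  # assumed valid range for a temperature sensor, in °C
readings = [
    (20.0, score_reading(20.0, 0.0, TEMP_RANGE, 10.0)),    # fresh: confidence 1.0
    (26.0, score_reading(26.0, 5.0, TEMP_RANGE, 10.0)),    # 5 s old: confidence 0.5
    (999.0, score_reading(999.0, 0.0, TEMP_RANGE, 10.0)),  # out of range: confidence 0.0
]
estimate = weighted_estimate(readings)
```

The corrupted 999 °C reading is excluded outright, and the older reading counts half as much as the fresh one, giving an estimate of 22.0 °C.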

Challenge 4: Real-Time Processing Requirements

Problem: Many agentic AI systems need immediate perception and response.

Solution: Optimize algorithms for speed and use parallel processing. Implement priority systems that focus on the most critical information first.
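The priority idea can be sketched with Python's `heapq` module. To keep the example deterministic, the per-cycle budget is a task count; a real-time system would more likely budget by elapsed time against a deadline. The `PerceptionScheduler` class and the task names are hypothetical.

```python
import heapq
import itertools
from typing import Callable, List, Tuple


class PerceptionScheduler:
    """Run perception tasks in priority order (lower number = more urgent).

    A monotonically increasing counter breaks ties, so tasks with equal
    priority run in submission order and the callables are never compared.
    """

    def __init__(self) -> None:
        self._heap: List[Tuple[int, int, str, Callable[[], object]]] = []
        self._counter = itertools.count()

    def submit(self, priority: int, name: str, task: Callable[[], object]) -> None:
        heapq.heappush(self._heap, (priority, next(self._counter), name, task))

    def run(self, max_tasks: int) -> List[str]:
        """Run at most `max_tasks` tasks this cycle; the rest stay queued."""
        done = []
        while self._heap and len(done) < max_tasks:
            _, _, name, task = heapq.heappop(self._heap)
            task()
            done.append(name)
        return done

    def pending(self) -> int:
        return len(self._heap)


scheduler = PerceptionScheduler()
scheduler.submit(2, "classify_background", lambda: None)
scheduler.submit(0, "detect_pedestrians", lambda: None)
scheduler.submit(1, "read_signs", lambda: None)
cycle_1 = scheduler.run(max_tasks=2)  # most critical work first
```

In this example, pedestrian detection and sign reading run in the first cycle, and background classification waits for the next one.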

Challenge 5: Context Understanding

Problem: Raw sensor data lacks context about meaning and relevance.

Solution: Build knowledge bases and context models that help interpret sensory information. Use machine learning to improve context understanding over time.

Perception Technologies and Techniques

Deep Learning Approaches

- Convolutional Neural Networks (CNNs): Excellent for visual pattern recognition

- Recurrent Neural Networks (RNNs): Effective for sequential data processing

- Transformer Models: Currently the dominant architecture for language understanding, and increasingly used for vision and multimodal input

- Generative Adversarial Networks (GANs): Useful for data augmentation and synthesis

Traditional Machine Learning Methods

- Support Vector Machines: Reliable for classification tasks

- Random Forests: Robust for handling mixed data types

- Clustering Algorithms: Effective for discovering patterns

- Bayesian Networks: Good for handling uncertainty

Signal Processing Techniques

- Fourier Transforms: Analyzing frequency components in signals

- Wavelet Analysis: Time-frequency analysis of complex signals

- Kalman Filtering: Estimating states from noisy observations

- Edge Detection: Finding boundaries and transitions in images
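Kalman filtering is compact enough to show in full for the one-dimensional case. This sketch assumes a random-walk model, in which the true value drifts with variance `process_var` per step, and a sensor with noise variance `meas_var`. Multi-dimensional filters replace these scalars with matrices.

```python
from typing import Iterable, List


def kalman_1d(measurements: Iterable[float], process_var: float, meas_var: float,
              x0: float = 0.0, p0: float = 1.0) -> List[float]:
    """Scalar Kalman filter for a slowly varying quantity (random-walk model).

    process_var (Q): how much the true value may drift between steps.
    meas_var (R): variance of the sensor noise.
    x0, p0: initial estimate and its variance.
    Returns the filtered estimate after each measurement.
    """
    x, p = x0, p0
    estimates = []
    for z in measurements:
        # Predict: the state is assumed unchanged, but uncertainty grows by Q.
        p = p + process_var
        # Update: blend prediction and measurement using the Kalman gain.
        k = p / (p + meas_var)
        x = x + k * (z - x)
        p = (1 - k) * p
        estimates.append(x)
    return estimates


estimates = kalman_1d([5.0] * 3, process_var=0.0, meas_var=1.0)
```

With `process_var=0` and constant measurements of 5.0, the estimates (2.5, about 3.33, 3.75, ...) move toward 5.0 as evidence accumulates, because each new reading receives a smaller gain.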

Measuring Perception Performance

Accuracy Metrics

Track how often the perception system correctly interprets environmental information:

| Metric | Description | Use Case |
|---|---|---|
| Precision | Fraction of the system's positive identifications that are correct | Object detection |
| Recall | Fraction of actual positives that the system correctly identifies | Safety-critical systems |
| F1 Score | Harmonic mean of precision and recall | Balanced performance assessment |
| Mean Average Precision (mAP) | Average precision (area under the precision-recall curve) computed per class, then averaged across classes | Multi-class recognition |
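Precision, recall, and F1 follow directly from counts of true positives, false positives, and false negatives. This sketch assumes that detections have already been matched to ground-truth objects (for object detection, typically by bounding-box overlap). mAP additionally requires ranking detections by confidence and is not shown.

```python
from typing import Dict


def precision_recall_f1(tp: int, fp: int, fn: int) -> Dict[str, float]:
    """Compute precision, recall, and F1 from true-positive, false-positive,
    and false-negative counts. Empty denominators yield 0.0."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


# Hypothetical frame: 4 detections, 3 of which match real objects,
# plus 2 real objects that the detector missed.
scores = precision_recall_f1(tp=3, fp=1, fn=2)
```

For this example frame, precision is 0.75, recall is 0.6, and F1 is about 0.67.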

Speed Metrics

Monitor processing time to ensure real-time performance:

- Latency: Time from data input to interpretation output

- Throughput: Amount of data processed per unit time

- Response Time: Total time for perception-to-action cycle

- Frame Rate: Processing speed for continuous data streams
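Latency and throughput can be measured by wrapping the perception call in a wall-clock timer. The sketch below uses `time.perf_counter` and reports a 95th-percentile latency alongside the mean. The `perceive` argument is a placeholder for whatever model or pipeline you are timing, and the summing workload is only a stand-in.

```python
import math
import statistics
import time
from typing import Any, Callable, Dict, Sequence


def measure_speed(perceive: Callable[[Any], Any], frames: Sequence[Any]) -> Dict[str, float]:
    """Run `perceive` on each frame and report latency (ms) and throughput (frames/s)."""
    if not frames:
        raise ValueError("need at least one frame")
    latencies_ms = []
    start = time.perf_counter()
    for frame in frames:
        t0 = time.perf_counter()
        perceive(frame)
        latencies_ms.append((time.perf_counter() - t0) * 1000.0)
    elapsed_s = time.perf_counter() - start
    ordered = sorted(latencies_ms)
    # Nearest-rank 95th percentile: tail latency matters more than the mean
    # when a late result is as bad as a missing one.
    p95 = ordered[max(0, math.ceil(0.95 * len(ordered)) - 1)]
    return {
        "mean_latency_ms": statistics.fmean(latencies_ms),
        "p95_latency_ms": p95,
        "max_latency_ms": ordered[-1],
        "throughput_fps": len(frames) / elapsed_s if elapsed_s > 0 else math.inf,
    }


# Stand-in workload: summing numbers instead of running a real model.
report = measure_speed(lambda frame: sum(range(10_000)), list(range(100)))
```

For a frame-by-frame stream, the reported throughput is the effective frame rate.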

Reliability Metrics

Assess consistency and dependability of perception systems:

- Confidence Scores: The system’s certainty about its interpretations

- Error Rates: Frequency of incorrect perceptions

- Robustness: Performance under varying conditions

- Stability: Consistency over time and repeated trials

Best Practices for Implementing Perception Systems

Design Considerations

Choose Appropriate Sensors: Select sensors that match your specific perception needs and environmental constraints.

Plan for Redundancy: Include backup sensors and alternative perception methods for critical applications.

Consider Computational Resources: Balance perception accuracy with available processing power and energy constraints.

Design for Scalability: Build systems that can handle increasing amounts of data and complexity.

Development Guidelines

Start Simple: Begin with basic perception tasks and gradually add complexity.

Use Quality Data: Invest in high-quality training and testing datasets that represent real-world conditions.

Implement Continuous Learning: Allow perception systems to improve through experience and feedback.

Test Thoroughly: Validate perception performance under diverse conditions and edge cases.

Integration Strategies

Standardize Interfaces: Create consistent APIs between perception and other system components.

Handle Uncertainty: Design systems that can operate effectively even with imperfect perception.

Monitor Performance: Implement continuous monitoring to detect perception degradation or failures.

Plan for Updates: Build systems that can incorporate improved perception models and techniques.

Real-World Examples of Perception in Action

Autonomous Driving

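Tesla’s Full Self-Driving (FSD) system demonstrates camera-centric perception at scale:

Earlier hardware configurations combined eight cameras, twelve ultrasonic sensors, and a forward-facing radar. Tesla has since phased radar and ultrasonic sensors out of most new vehicles in favor of a camera-based approach it calls "Tesla Vision." The eight cameras together provide a 360-degree view around the car, from which the system identifies lanes, other vehicles, pedestrians, traffic signs, and road conditions in real time.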

The perception system must handle complex scenarios like construction zones, emergency vehicles, and unusual weather conditions. It combines visual processing with mapping data to understand context and make driving decisions.

Smart Home Systems

Amazon Alexa and Google Home showcase advanced audio perception:

These systems continuously listen for wake words while filtering out background noise. They use beam-forming technology to focus on the speaker’s voice and natural language processing to understand commands.

The perception system handles multiple accents, background conversations, and varying audio quality. It combines speech recognition with context understanding to interpret user intentions accurately.

Industrial Robotics

Manufacturing robots demonstrate precise visual and spatial perception:

Robotic assembly systems use computer vision to identify parts, check quality, and guide precise movements. They adapt to variations in part positioning and lighting conditions.

The perception system must achieve high accuracy and reliability in industrial environments with dust, vibration, and changing conditions. It provides feedback for quality control and process optimization.

Medical Diagnosis AI

Medical AI systems showcase specialized perception capabilities:

Radiology AI analyzes medical images to detect tumors, fractures, and other abnormalities. These systems process X-rays, MRIs, and CT scans, and on some narrow, well-defined tasks, published studies report accuracy comparable to or exceeding that of specialist radiologists.

The perception system must distinguish between normal variations and pathological conditions. It provides confidence scores and highlights areas of concern for human medical professionals.

The Future of Perception in Agentic AI

Emerging Technologies

- Neuromorphic Sensors: Brain-inspired sensors that process information more efficiently

- Quantum Sensing: Ultra-sensitive detection capabilities for specialized applications

- Multimodal Transformers: Models that seamlessly combine different types of sensory input

- Edge AI Processors: Specialized hardware for real-time perception processing

Integration Trends

- Federated Learning: Improving perception through distributed learning across multiple agents

- Transfer Learning: Adapting perception models quickly to new domains and tasks

- Few-Shot Learning: Training perception systems with minimal examples

- Continual Learning: Enabling perception systems to learn continuously without forgetting

Application Expansions

- Environmental Monitoring: AI agents that perceive and respond to climate and ecosystem changes

- Space Exploration: Perception systems for autonomous spacecraft and planetary rovers

- Underwater Systems: Specialized perception for marine and underwater environments

- Medical Robotics: Precise perception for surgical and therapeutic applications

Summary

The perception phase of the agentic AI loop serves as the foundation for intelligent behavior. During this critical stage, AI agents collect environmental data through various sensors, process and clean this information, extract meaningful features, integrate multiple data sources, and create internal representations for decision-making.

Effective perception requires careful attention to sensor selection, data quality, processing algorithms, and real-time performance. The best perception systems combine multiple technologies and techniques while addressing common challenges like environmental variability, sensor limitations, and context understanding.

Success in implementing perception systems depends on following best practices for design, development, and integration. This includes starting with simple approaches, using quality data, implementing continuous learning, and planning for scalability and updates.

As AI technology advances, perception capabilities will likely become more sophisticated, more efficient, and applicable to new domains. Emerging directions such as neuromorphic sensors, quantum sensing, and multimodal models may let AI agents perceive and understand their environments more accurately and quickly than today's systems.

Understanding perception in agentic AI systems is essential for anyone working with autonomous systems, robotics, or intelligent software agents. By mastering these concepts, you can build more effective AI systems that truly understand and respond appropriately to their environments.

Frequently Asked Questions

How does perception differ from simple data collection in AI systems?

Perception goes beyond data collection by including interpretation and meaning extraction. While data collection simply gathers raw information, perception processes this data to understand what it means in context. Perception systems identify patterns, objects, and relationships that enable intelligent decision-making.

What happens if the perception phase fails or provides incorrect information?

Perception failures can cascade through the entire agentic AI loop, leading to poor planning and inappropriate actions. Robust systems typically include error detection, confidence scoring, and fallback mechanisms. They may request additional information, switch to alternative sensors, or default to safe behaviors when perception uncertainty is high.
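This fallback behavior can be sketched as a confidence-gated policy. The two thresholds (0.8 to act, 0.5 to ask for more data) and the action names are hypothetical. Real systems tune such thresholds to the cost of errors in their domain.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Perception:
    label: Optional[str]  # what the system thinks it sees, or None if nothing was recognized
    confidence: float     # in [0, 1]


def choose_action(p: Perception, act_threshold: float = 0.8,
                  query_threshold: float = 0.5) -> str:
    """Hypothetical policy: act when confident, gather more data when unsure,
    and fall back to a safe default when perception is unreliable."""
    if p.label is not None and p.confidence >= act_threshold:
        return f"proceed:{p.label}"
    if p.confidence >= query_threshold:
        return "request_more_data"  # e.g. re-scan, consult another sensor, or ask a human
    return "safe_stop"
```

Ordering the checks from most to least confident means an unexpected input always falls through to the safe default rather than to an action.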

Can agentic AI systems improve their perception capabilities over time?

Yes, modern agentic AI systems often include learning mechanisms that improve perception through experience. They can adapt to new environmental conditions, learn to recognize new objects or patterns, and refine their interpretation accuracy based on feedback from their actions and outcomes.

How do multimodal perception systems handle conflicting information from different sensors?

Multimodal systems use sensor fusion techniques that weight information based on reliability, context, and past performance. They may use voting mechanisms, confidence-weighted averaging, or probabilistic models to resolve conflicts. Some systems also identify and report when sensors provide contradictory information.
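A confidence-weighted vote that also reports close disagreements might look like the sketch below. The `min_margin` rule for flagging a conflict is an assumption made for illustration, not a standard. Probabilistic fusion (for example, Bayesian updating) is a common alternative.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def resolve_conflict(votes: List[Tuple[str, str, float]],
                     min_margin: float = 0.2) -> Tuple[str, bool]:
    """Confidence-weighted vote across sensors.

    votes: (sensor_name, label, confidence) triples.
    Returns (winning_label, conflict_flag). The flag is True when the winner's
    lead over the runner-up is less than `min_margin` of the total weight, so
    the disagreement can be reported rather than silently resolved.
    """
    if not votes:
        raise ValueError("need at least one vote")
    scores: Dict[str, float] = defaultdict(float)
    for _, label, conf in votes:
        scores[label] += conf
    total = sum(scores.values())
    ranked = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
    winner, top = ranked[0]
    runner_up = ranked[1][1] if len(ranked) > 1 else 0.0
    conflict = total > 0 and (top - runner_up) / total < min_margin
    return winner, conflict


clear_vote = resolve_conflict([("camera", "pedestrian", 0.9),
                               ("lidar", "pedestrian", 0.7),
                               ("radar", "pole", 0.6)])
close_vote = resolve_conflict([("camera", "pedestrian", 0.5),
                               ("lidar", "pole", 0.45)])
```

When the camera and lidar agree, the vote resolves cleanly. When two sensors put nearly equal weight behind different labels, the flag is raised so the disagreement can be surfaced instead of hidden.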

What are the main bottlenecks in real-time perception processing?

The primary bottlenecks include computational complexity of processing algorithms, data transfer speeds between sensors and processors, memory limitations for storing and processing large datasets, and the need to balance accuracy with processing speed. Optimization often focuses on efficient algorithms, parallel processing, and specialized hardware acceleration.
