Building an AI agent with ChatGPT is simpler than most people think. You can create intelligent automation that handles tasks, answers questions, and interacts with users naturally. This guide shows you exactly how to do it, step by step.

An AI agent is a program that uses artificial intelligence to perform tasks automatically. Unlike basic chatbots, these agents can reason, make decisions, and take actions based on what they learn from conversations.

What You’ll Learn

This guide covers everything you need to build a working AI agent:

- Setting up your development environment

- Connecting to ChatGPT’s API

- Creating agent logic and memory

- Adding tools and capabilities

- Testing and deployment

- Real-world examples and use cases

AI Agents vs Regular Chatbots

Regular Chatbots

- Follow predefined scripts

- Give the same responses to similar questions

- Cannot learn or adapt

- Limited to basic keyword matching

AI Agents

- Use natural language processing

- Carry context forward from earlier turns in a conversation

- Can use external tools and APIs

- Make decisions based on context

- Handle complex, multi-step tasks

Think of chatbots as vending machines – you press a button, you get a specific item. AI agents are more like personal assistants who understand your needs and figure out how to help you.

Prerequisites and Requirements

Technical Skills Needed

- Basic programming knowledge (Python recommended)

- Understanding of APIs and HTTP requests

- Familiarity with JSON data format

Tools and Software

- Python 3.8 or higher (Python 3.7 is end-of-life and no longer supported by recent releases of the openai package)

- Text editor or IDE (VS Code, PyCharm)

- OpenAI API account

- Git for version control

Estimated Costs

- OpenAI API usage: billed per token at rates that vary by model (see the pricing table in the FAQ; very affordable for testing)

- Hosting: $5-20/month for basic cloud hosting

- Development tools: Free options available

Setting Up Your Development Environment

Step 1: Install Python and Required Libraries

First, make sure Python is installed on your system. Then install the essential libraries:

pip install openai python-dotenv requests flask

Step 2: Create Your Project Structure

Set up a clean project folder:

ai-agent-project/
├── main.py
├── config.py
├── agent.py
├── tools/
├── data/
└── .env

Step 3: Get Your OpenAI API Key

- Visit OpenAI’s platform

- Create an account or log in

- Navigate to API Keys section

- Generate a new secret key

- Save it securely – you’ll need it soon

Step 4: Set Up Environment Variables

Create a .env file in your project root:

OPENAI_API_KEY=your_api_key_here

MODEL_NAME=gpt-5

MAX_TOKENS=1000

TEMPERATURE=0.7

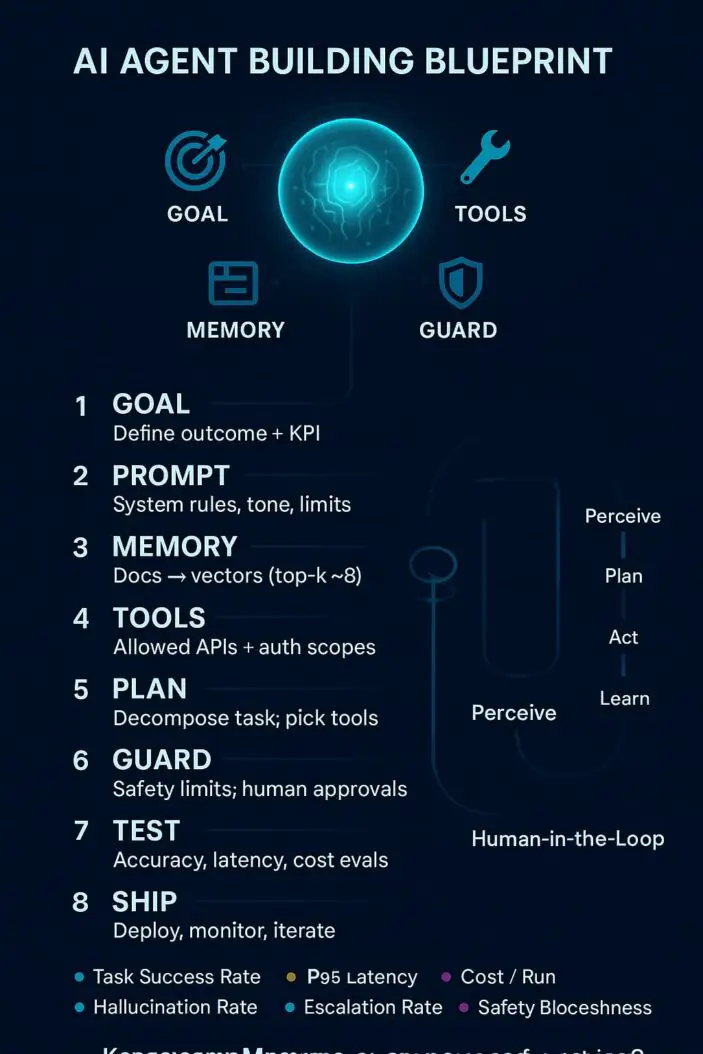
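The project layout lists a config.py, but its contents are never shown. Here is a minimal sketch, assuming the file only needs to turn the four .env values into typed settings. The `Settings` dataclass and `load_settings` helper are illustrative names, not part of the original code.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class Settings:
    api_key: str
    model_name: str
    max_tokens: int
    temperature: float


def load_settings(env=None):
    """Build Settings from a mapping of environment variables (hypothetical helper)."""
    env = os.environ if env is None else env
    api_key = env.get("OPENAI_API_KEY", "")
    if not api_key:
        raise ValueError("OPENAI_API_KEY is not set")
    return Settings(
        api_key=api_key,
        model_name=env.get("MODEL_NAME", "gpt-5"),
        # Environment values are strings, so convert them explicitly.
        max_tokens=int(env.get("MAX_TOKENS", "1000")),
        temperature=float(env.get("TEMPERATURE", "0.7")),
    )
```

Call load_dotenv() first so the values from .env are present in os.environ before load_settings() runs.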

Core Components of an AI Agent

Every effective AI agent needs these essential components:

1. Language Model Interface

This connects your agent to ChatGPT’s API. It handles sending messages and receiving responses.

2. Memory System

Stores conversation history and learned information. Without memory, your agent forgets everything after each interaction.

3. Tool Integration

Allows your agent to perform actions like searching the web, sending emails, or accessing databases.

4. Decision Engine

The logic that determines what the agent should do next based on user input and context.

5. Response Generator

Formats and delivers responses in a natural, helpful way.

Building Your First AI Agent: Step-by-Step Guide

Step 1: Create the Basic Agent Class

Start with this foundation code in agent.py:

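import os
from datetime import datetime

from dotenv import load_dotenv
from openai import OpenAI

load_dotenv()


class AIAgent:
    def __init__(self):
        self.api_key = os.getenv('OPENAI_API_KEY')
        self.model = os.getenv('MODEL_NAME', 'gpt-5')
        self.max_tokens = int(os.getenv('MAX_TOKENS', '1000'))
        self.temperature = float(os.getenv('TEMPERATURE', '0.7'))
        self.conversation_history = []
        self.tools = {}
        # Initialize the OpenAI client (openai package v1.0 or later)
        self.client = OpenAI(api_key=self.api_key)

    def add_message(self, role, content):
        """Add a message to conversation history"""
        self.conversation_history.append({
            "role": role,
            "content": content,
            "timestamp": datetime.now().isoformat()
        })

    def get_response(self, user_input):
        """Get response from ChatGPT"""
        self.add_message("user", user_input)
        # The API accepts only known message fields, so drop the local timestamp
        messages = [
            {"role": msg["role"], "content": msg["content"]}
            for msg in self.conversation_history
        ]
        try:
            # Parameter support varies by model: some newer models expect
            # max_completion_tokens instead of max_tokens and accept only the
            # default temperature. Check the API reference for your model.
            response = self.client.chat.completions.create(
                model=self.model,
                messages=messages,
                max_tokens=self.max_tokens,
                temperature=self.temperature
            )
            assistant_response = response.choices[0].message.content
            self.add_message("assistant", assistant_response)
            return assistant_response
        except Exception as e:
            return f"Error: {str(e)}"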

Step 2: Add Memory and Context Management

Enhance your agent with better memory handling. Add these methods to the AIAgent class, and call manage_context() before each API request so the history stays bounded:

    def manage_context(self, max_messages=20):
        """Keep conversation history manageable"""
        if len(self.conversation_history) > max_messages:
            # Keep system messages plus the most recent non-system messages
            system_messages = [msg for msg in self.conversation_history if msg.get('role') == 'system']
            other_messages = [msg for msg in self.conversation_history if msg.get('role') != 'system']
            self.conversation_history = system_messages + other_messages[-max_messages:]

    def set_system_prompt(self, prompt):
        """Set the agent's personality and capabilities"""
        system_message = {
            "role": "system",
            "content": prompt,
            "timestamp": datetime.now().isoformat()
        }
        self.conversation_history.insert(0, system_message)
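The same pruning idea can be written as standalone functions, which are easier to test than methods that mutate the agent. This is a sketch under the assumption that each history entry is a dict with `role`, `content`, and an optional local `timestamp`. The names `trim_history` and `to_api_messages` are illustrative.

```python
def trim_history(history, max_messages=20):
    """Return all system messages plus the most recent non-system messages."""
    system = [m for m in history if m.get("role") == "system"]
    others = [m for m in history if m.get("role") != "system"]
    # A slice of [-0:] would return everything, so handle zero explicitly.
    recent = others[-max_messages:] if max_messages > 0 else []
    return system + recent


def to_api_messages(history):
    """Keep only the fields that belong in a chat request."""
    return [{"role": m["role"], "content": m["content"]} for m in history]
```

Keeping trimming and field-stripping separate means the full history, timestamps included, can still be logged or persisted while the request payload stays small.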

Step 3: Implement Tool Integration

Add the ability to use external tools (these are also AIAgent methods):

    def register_tool(self, name, function, description):
        """Register a tool that the agent can use"""
        self.tools[name] = {
            "function": function,
            "description": description
        }

    def use_tool(self, tool_name, *args, **kwargs):
        """Execute a registered tool"""
        if tool_name in self.tools:
            try:
                return self.tools[tool_name]["function"](*args, **kwargs)
            except Exception as e:
                return f"Tool error: {str(e)}"
        else:
            return f"Tool '{tool_name}' not found"
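The registry stores a description for each tool, but nothing so far passes those descriptions to the model. One way to do that is the function-calling format of the Chat Completions API, where each tool is described by a name, a description, and a JSON Schema for its arguments. The sketch below converts the registry into that shape. The `parameters` entry is an assumption, since the original registry stores only `function` and `description`.

```python
def build_tool_specs(tools):
    """Convert a {name: {"description", "parameters"}} registry into tool specs."""
    specs = []
    for name, entry in tools.items():
        specs.append({
            "type": "function",
            "function": {
                "name": name,
                "description": entry["description"],
                # JSON Schema for the arguments; defaults to "no arguments".
                "parameters": entry.get(
                    "parameters", {"type": "object", "properties": {}}
                ),
            },
        })
    return specs
```

The resulting list would be passed as the `tools` argument of a chat completion request, letting the model decide when a tool applies.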

Step 4: Create Example Tools

Add practical tools your agent can use:

# tools/web_search.py
import requests


def search_web(query, num_results=3):
    """Simple web search function"""
    # This is a simplified example - use a real search API.
    # num_results is unused here: the instant answer API returns one abstract.
    try:
        # Using DuckDuckGo's instant answer API (free)
        response = requests.get(
            "https://api.duckduckgo.com/",
            params={"q": query, "format": "json"},  # requests URL-encodes these
            timeout=10
        )
        response.raise_for_status()
        data = response.json()
        if data.get('Abstract'):
            return data['Abstract']
        else:
            return f"No clear answer found for: {query}"
    except Exception as e:
        return f"Search failed: {str(e)}"


# tools/file_operations.py
def save_to_file(filename, content):
    """Save content to a file"""
    try:
        with open(f"data/{filename}", 'w') as f:
            f.write(content)
        return f"Saved to {filename}"
    except Exception as e:
        return f"Failed to save: {str(e)}"


def read_from_file(filename):
    """Read content from a file"""
    try:
        with open(f"data/{filename}", 'r') as f:
            return f.read()
    except Exception as e:
        return f"Failed to read: {str(e)}"
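Because save_to_file and read_from_file build the path from `data/{filename}`, a filename such as `../.env` would reach outside the data folder. Here is a hedged sketch of a guard that could run before opening the file. `resolve_in_data_dir` is a hypothetical helper, not part of the original tools.

```python
from pathlib import Path


def resolve_in_data_dir(filename, data_dir="data"):
    """Return the absolute path for filename, refusing paths outside data_dir."""
    base = Path(data_dir).resolve()
    target = (base / filename).resolve()
    try:
        # relative_to raises ValueError when target is not under base.
        target.relative_to(base)
    except ValueError:
        raise ValueError(f"Refusing path outside {data_dir}/: {filename}") from None
    return target
```

The file tools could then open `resolve_in_data_dir(filename)` instead of formatting the path by hand, and report the ValueError through their existing error handling.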

Step 5: Build the Decision Engine

Create logic to determine when and how to use tools:

    def process_request(self, user_input):
        """Process user request and decide on actions"""
        # Check if user is asking for web search
        search_keywords = ['search', 'find', 'look up', 'what is', 'who is']
        if any(keyword in user_input.lower() for keyword in search_keywords):
            # Extract search query
            query = user_input.lower()
            for keyword in search_keywords:
                query = query.replace(keyword, '').strip()
            search_result = self.use_tool('web_search', query)
            return f"I found this information: {search_result}"

        # Check if user wants to save something
        if 'save' in user_input.lower() and 'file' in user_input.lower():
            return "What would you like me to save and what filename should I use?"

        # Default to normal conversation
        return self.get_response(user_input)
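Keyword matching is easy to follow but brittle: "find" also matches "findings", and every new tool needs new keywords. A common alternative is to let the model name the tool and supply its arguments as JSON, then dispatch on that. The sketch below covers only the dispatch step and assumes the tool name and a JSON argument string have already been extracted from the model's reply. `dispatch_tool_call` is an illustrative name.

```python
import json


def dispatch_tool_call(tools, name, arguments_json):
    """Run a registered tool given its name and JSON-encoded keyword arguments."""
    if name not in tools:
        return f"Tool '{name}' not found"
    try:
        kwargs = json.loads(arguments_json or "{}")
    except json.JSONDecodeError as e:
        return f"Invalid tool arguments: {e}"
    if not isinstance(kwargs, dict):
        return "Invalid tool arguments: expected a JSON object"
    try:
        return tools[name]["function"](**kwargs)
    except Exception as e:
        # Mirror use_tool: report failures as text instead of raising.
        return f"Tool error: {e}"
```

The `tools` argument has the same shape as the agent's `self.tools` registry, so this could sit alongside use_tool rather than replace it.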

Step 6: Create the Main Application

Put it all together in main.py:

from agent import AIAgent
from tools.web_search import search_web
from tools.file_operations import save_to_file, read_from_file


def main():
    # Initialize the agent
    agent = AIAgent()

    # Set system prompt
    system_prompt = """
    You are a helpful AI assistant with access to web search and file operations.
    You can search for information online and save/read files when requested.
    Always be helpful, accurate, and clear in your responses.
    """
    agent.set_system_prompt(system_prompt)

    # Register tools
    agent.register_tool('web_search', search_web, 'Search the web for information')
    agent.register_tool('save_file', save_to_file, 'Save content to a file')
    agent.register_tool('read_file', read_from_file, 'Read content from a file')

    print("AI Agent is ready! Type 'quit' to exit.")
    while True:
        user_input = input("\nYou: ")
        if user_input.lower() == 'quit':
            break
        response = agent.process_request(user_input)
        print(f"Agent: {response}")


if __name__ == "__main__":
    main()

Advanced Features and Capabilities

Adding Long-term Memory

Create a persistent memory system:

import sqlite3


class PersistentMemory:
    def __init__(self, db_path="agent_memory.db"):
        self.conn = sqlite3.connect(db_path)
        self.setup_database()

    def setup_database(self):
        """Create memory tables"""
        cursor = self.conn.cursor()
        cursor.execute("""
            CREATE TABLE IF NOT EXISTS conversations (
                id INTEGER PRIMARY KEY,
                user_id TEXT,
                message TEXT,
                response TEXT,
                timestamp DATETIME DEFAULT CURRENT_TIMESTAMP
            )
        """)
        self.conn.commit()

    def save_interaction(self, user_id, message, response):
        """Save conversation to database"""
        cursor = self.conn.cursor()
        cursor.execute(
            "INSERT INTO conversations (user_id, message, response) VALUES (?, ?, ?)",
            (user_id, message, response)
        )
        self.conn.commit()

    def get_user_history(self, user_id, limit=10):
        """Get recent conversations for a user"""
        cursor = self.conn.cursor()
        cursor.execute(
            "SELECT message, response FROM conversations WHERE user_id = ? ORDER BY timestamp DESC LIMIT ?",
            (user_id, limit)
        )
        return cursor.fetchall()
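get_user_history returns rows newest-first as (message, response) tuples, which is not the shape a chat request expects. Here is a small sketch of the conversion, assuming the stored history should be replayed in chronological order. `history_to_messages` is an illustrative name.

```python
def history_to_messages(rows):
    """Convert newest-first (message, response) rows into chronological chat messages."""
    messages = []
    for message, response in reversed(rows):
        messages.append({"role": "user", "content": message})
        messages.append({"role": "assistant", "content": response})
    return messages
```

The result can be placed after the system prompt when a returning user starts a new session.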

Multi-Modal Capabilities

Add image and document processing:

import base64

from openai import OpenAI

client = OpenAI()  # reads OPENAI_API_KEY from the environment


def process_image(image_path):
    """Process and describe an image"""
    try:
        with open(image_path, 'rb') as f:
            image_data = base64.b64encode(f.read()).decode()
        response = client.chat.completions.create(
            # Use any vision-capable model available to your account;
            # "gpt-4o" is one example (gpt-4-vision-preview has been retired).
            model="gpt-4o",
            messages=[
                {
                    "role": "user",
                    "content": [
                        {"type": "text", "text": "What's in this image?"},
                        {
                            "type": "image_url",
                            "image_url": {
                                "url": f"data:image/jpeg;base64,{image_data}"
                            }
                        }
                    ]
                }
            ],
            max_tokens=300
        )
        return response.choices[0].message.content
    except Exception as e:
        return f"Error processing image: {str(e)}"

API Integration

Connect to external services:

import os

import requests


def get_weather(city):
    """Get weather information"""
    api_key = os.getenv('WEATHER_API_KEY')
    url = "https://api.openweathermap.org/data/2.5/weather"
    try:
        # Passing params lets requests URL-encode city names with spaces
        response = requests.get(
            url,
            params={"q": city, "appid": api_key, "units": "metric"},
            timeout=10
        )
        data = response.json()
        if response.status_code == 200:
            temp = data['main']['temp']
            description = data['weather'][0]['description']
            return f"Weather in {city}: {temp}°C, {description}"
        else:
            return f"Couldn't get weather for {city}"
    except Exception as e:
        return f"Weather service error: {str(e)}"


def send_email(to_address, subject, body):
    """Send email using email service API"""
    # Implement using your preferred email service.
    # This placeholder sends nothing; it only returns a confirmation string.
    return f"Email sent to {to_address} with subject: {subject}"

Testing and Debugging Your AI Agent

Unit Testing

Create tests for your agent components:

# test_agent.py
import unittest

from agent import AIAgent


class TestAIAgent(unittest.TestCase):
    def setUp(self):
        self.agent = AIAgent()
        self.agent.set_system_prompt("You are a helpful assistant.")

    def test_add_message(self):
        self.agent.add_message("user", "Hello")
        self.assertEqual(len(self.agent.conversation_history), 2)  # system + user

    def test_tool_registration(self):
        def dummy_tool():
            return "test"
        self.agent.register_tool("test_tool", dummy_tool, "A test tool")
        self.assertIn("test_tool", self.agent.tools)

    def test_tool_execution(self):
        def dummy_tool(x):
            return x * 2
        self.agent.register_tool("multiply", dummy_tool, "Multiply by 2")
        result = self.agent.use_tool("multiply", 5)
        self.assertEqual(result, 10)


if __name__ == '__main__':
    unittest.main()

Debugging Common Issues

| Problem | Cause | Solution |

|---|---|---|

| API timeouts | Large requests or slow connection | Reduce max_tokens, add retry logic |

| Context too long | Too many messages in history | Implement context management |

| Tool errors | Missing dependencies or wrong parameters | Add error handling and validation |

| Memory issues | Large conversation histories | Implement conversation pruning |

| Rate limits | Too many API calls | Add rate limiting and caching |
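Several rows in the table point to retry logic. Here is a minimal sketch of retrying with exponential backoff, written against a generic callable so it runs without network access. The name `call_with_retry` and its defaults are assumptions. In practice you would narrow `retry_on` to transient errors such as timeouts and rate limits.

```python
import random
import time


def call_with_retry(func, max_attempts=4, base_delay=1.0, sleep=time.sleep,
                    retry_on=(Exception,)):
    """Call func(); after a failure wait base_delay * 2**attempt plus jitter, then retry."""
    for attempt in range(max_attempts):
        try:
            return func()
        except retry_on:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            delay = base_delay * (2 ** attempt)
            # Up to 10% jitter keeps many clients from retrying in lockstep.
            sleep(delay + random.uniform(0, delay * 0.1))
```

A call such as `call_with_retry(lambda: agent.get_response(text))` would only help if get_response raised on failure. The version in this guide returns an error string instead, so it would need to re-raise for retries to trigger.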

Performance Monitoring

Track your agent’s performance:

import logging
import time


class PerformanceMonitor:
    def __init__(self):
        self.metrics = {
            'response_times': [],
            'api_calls': 0,
            'errors': 0,
            'successful_interactions': 0
        }

    def log_interaction(self, start_time, success=True, error=None):
        duration = time.time() - start_time
        self.metrics['response_times'].append(duration)
        self.metrics['api_calls'] += 1
        if success:
            self.metrics['successful_interactions'] += 1
        else:
            self.metrics['errors'] += 1
            if error:
                logging.error(f"Agent error: {error}")

    def get_stats(self):
        if self.metrics['response_times']:
            avg_response_time = sum(self.metrics['response_times']) / len(self.metrics['response_times'])
            success_rate = (self.metrics['successful_interactions'] / self.metrics['api_calls']) * 100
            return {
                'average_response_time': avg_response_time,
                'success_rate': success_rate,
                'total_interactions': self.metrics['api_calls']
            }
        return {}

Deployment Options

Local Development Server

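For testing and development (saved here as app.py):

from flask import Flask, request, jsonify

from agent import AIAgent

app = Flask(__name__)
# One shared agent means every caller shares one conversation history;
# keep an agent per user or session for anything beyond a demo.
agent = AIAgent()


@app.route('/chat', methods=['POST'])
def chat():
    data = request.get_json(silent=True) or {}
    user_message = data.get('message')
    if not user_message:
        return jsonify({'error': 'message is required'}), 400
    response = agent.process_request(user_message)
    return jsonify({'response': response})


if __name__ == '__main__':
    # debug=True is for local development only; never expose it publicly
    app.run(debug=True, host='0.0.0.0', port=5000)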

Cloud Deployment

Option 1: Heroku

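- Create a Procfile. main.py is the interactive command-line loop and cannot serve web traffic, so point gunicorn at the Flask app instead (this assumes the Flask server above is saved as app.py):

web: gunicorn app:app

- Create requirements.txt:

openai
python-dotenv
requests
flask
gunicorn

- Deploy. Add .env to .gitignore first so the API key is never committed, and set the key on Heroku with heroku config:set:

git init
git add .
git commit -m "Initial commit"
heroku create your-agent-name
heroku config:set OPENAI_API_KEY=your_api_key_here
git push heroku main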

Option 2: AWS Lambda

Create a serverless function:

import json

from agent import AIAgent

agent = AIAgent()


def lambda_handler(event, context):
    user_message = json.loads(event['body'])['message']
    response = agent.process_request(user_message)
    return {
        'statusCode': 200,
        'body': json.dumps({'response': response})
    }

Option 3: Docker Container

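Create a Dockerfile. main.py is the interactive command-line loop, so the container runs the Flask server with gunicorn instead (this assumes the server is saved as app.py and gunicorn is listed in requirements.txt):

FROM python:3.9-slim
WORKDIR /app
COPY requirements.txt .
RUN pip install -r requirements.txt
COPY . .
EXPOSE 5000
CMD ["gunicorn", "--bind", "0.0.0.0:5000", "app:app"]

Build and run, passing the API key at runtime (add .env to a .dockerignore file so COPY . . does not bake it into the image):

docker build -t ai-agent .
docker run -p 5000:5000 --env-file .env ai-agent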

Real-World Use Cases and Examples

Customer Support Agent

def create_support_agent():
    agent = AIAgent()
    system_prompt = """
    You are a customer support agent for TechCorp.
    You can help with:
    - Order status and tracking
    - Product information
    - Technical troubleshooting
    - Return and refund requests
    Always be polite, helpful, and solution-focused.
    If you can't solve an issue, escalate to human support.
    """
    agent.set_system_prompt(system_prompt)

    # Add tools for order lookup, knowledge base search, etc.
    # lookup_order_status and search_knowledge_base are placeholders:
    # implement them against your own systems before registering.
    agent.register_tool('lookup_order', lookup_order_status, 'Check order status')
    agent.register_tool('search_kb', search_knowledge_base, 'Search help articles')
    return agent

Personal Assistant Agent

def create_personal_assistant():
    agent = AIAgent()
    system_prompt = """
    You are a personal assistant that helps with daily tasks.
    You can:
    - Schedule appointments
    - Set reminders
    - Search for information
    - Manage to-do lists
    - Send messages
    Be proactive and helpful. Ask clarifying questions when needed.
    """
    agent.set_system_prompt(system_prompt)

    # Calendar and task management tools.
    # add_calendar_event, create_reminder, and manage_todo_list are
    # placeholders: implement them before registering.
    agent.register_tool('add_calendar_event', add_calendar_event, 'Add calendar events')
    agent.register_tool('create_reminder', create_reminder, 'Set reminders')
    agent.register_tool('manage_todos', manage_todo_list, 'Manage task lists')
    return agent

Educational Tutor Agent

def create_tutor_agent(subject):
    agent = AIAgent()
    system_prompt = f"""
    You are an expert tutor for {subject}.
    Your teaching style:
    - Break down complex concepts into simple parts
    - Use examples and analogies
    - Ask questions to check understanding
    - Provide practice problems
    - Encourage and motivate students
    Adapt your explanations to the student's level.
    """
    agent.set_system_prompt(system_prompt)

    # Educational tools.
    # generate_practice_problems, check_student_work, and
    # find_learning_resources are placeholders: implement them before registering.
    agent.register_tool('generate_practice', generate_practice_problems, 'Create practice problems')
    agent.register_tool('check_work', check_student_work, 'Review student answers')
    agent.register_tool('find_resources', find_learning_resources, 'Find additional materials')
    return agent

Security and Best Practices

API Key Security

Never expose your API keys:

# Good - using environment variables

api_key = os.getenv('OPENAI_API_KEY')

# Bad - hardcoding keys

api_key = "sk-your-key-here" # Don't do this!

Input Validation

Always validate user input:

def validate_input(user_input):

"""Validate and sanitize user input"""

if not user_input or len(user_input) > 1000:

return False, "Input too long or empty"

# Check for potential injection attempts

dangerous_patterns = ['<script>', 'javascript:', 'eval(']

if any(pattern in user_input.lower() for pattern in dangerous_patterns):

return False, "Invalid input detected"

return True, "Valid"

Rate Limiting

Prevent abuse with rate limiting:

from collections import defaultdict
import time


class RateLimiter:
    def __init__(self, max_requests=10, time_window=60):
        self.max_requests = max_requests
        self.time_window = time_window
        self.requests = defaultdict(list)

    def is_allowed(self, user_id):
        now = time.time()
        user_requests = self.requests[user_id]

        # Remove old requests
        user_requests = [req_time for req_time in user_requests if now - req_time < self.time_window]
        self.requests[user_id] = user_requests

        # Check if under limit
        if len(user_requests) < self.max_requests:
            user_requests.append(now)
            return True
        return False

Data Privacy

Protect user data:

import re


def sanitize_logs(log_data):
    """Remove sensitive information from logs"""
    sensitive_patterns = [
        r'\b\d{4}[-\s]?\d{4}[-\s]?\d{4}[-\s]?\d{4}\b',  # Credit cards
        r'\b\d{3}-\d{2}-\d{4}\b',  # SSN
        r'\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b'  # Emails
    ]
    sanitized = log_data
    for pattern in sensitive_patterns:
        sanitized = re.sub(pattern, '[REDACTED]', sanitized)
    return sanitized

Performance Optimization

Caching Responses

Implement response caching:

import hashlib
import json


class ResponseCache:
    def __init__(self, max_size=1000):
        self.cache = {}
        self.max_size = max_size

    def get_cache_key(self, messages):
        """Generate cache key from conversation context"""
        content = json.dumps(messages, sort_keys=True)
        return hashlib.md5(content.encode()).hexdigest()

    def get(self, messages):
        """Get cached response"""
        key = self.get_cache_key(messages)
        return self.cache.get(key)

    def set(self, messages, response):
        """Cache response"""
        if len(self.cache) >= self.max_size:
            # Remove oldest entry (dicts preserve insertion order)
            oldest_key = next(iter(self.cache))
            del self.cache[oldest_key]
        key = self.get_cache_key(messages)
        self.cache[key] = response
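One caveat when pairing this cache with the agent from earlier: add_message stores a timestamp on every message, so hashing the full message dicts gives two otherwise identical conversations different keys and the cache never hits. Here is a sketch of a key function that hashes only role and content. `stable_cache_key` is an illustrative name, and SHA-256 is swapped in for MD5 as a matter of habit rather than necessity.

```python
import hashlib
import json


def stable_cache_key(messages):
    """Hash only role and content so per-message timestamps do not defeat the cache."""
    core = [{"role": m["role"], "content": m["content"]} for m in messages]
    payload = json.dumps(core, sort_keys=True, ensure_ascii=False)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()
```

Caching on the whole conversation still only pays off for repeated identical exchanges, such as FAQ-style single-turn questions.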

Async Processing

Handle multiple requests efficiently:

import asyncio

import openai


class AsyncAIAgent:
    def __init__(self):
        self.client = openai.AsyncOpenAI()

    async def get_response_async(self, messages):
        """Get response asynchronously"""
        try:
            response = await self.client.chat.completions.create(
                model="gpt-4",
                messages=messages,
                max_tokens=1000
            )
            return response.choices[0].message.content
        except Exception as e:
            return f"Error: {str(e)}"

    async def process_multiple_requests(self, request_list):
        """Process multiple requests concurrently"""
        tasks = [self.get_response_async(req) for req in request_list]
        return await asyncio.gather(*tasks)
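asyncio.gather starts every request at once, which can run straight into rate limits when the list is long. Here is a sketch of capping the number of requests in flight with asyncio.Semaphore. It takes zero-argument coroutine factories so it can be exercised without an API key. `gather_limited` is an illustrative name.

```python
import asyncio


async def gather_limited(coro_factories, limit=5):
    """Run zero-argument coroutine factories with at most `limit` in flight at once."""
    semaphore = asyncio.Semaphore(limit)

    async def run(factory):
        async with semaphore:
            return await factory()

    # gather preserves input order in its results.
    return await asyncio.gather(*(run(f) for f in coro_factories))
```

With the agent above, the factories could be `lambda m=m: agent.get_response_async(m)` for each message list `m`.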

Monitoring and Analytics

Track your agent’s performance:

from collections import defaultdict


class AgentAnalytics:
    def __init__(self):
        self.metrics = {
            'total_interactions': 0,
            'successful_responses': 0,
            'errors': 0,
            'average_response_time': 0,
            'popular_requests': defaultdict(int),
            'user_satisfaction': []
        }

    def log_interaction(self, request_type, response_time, success=True, satisfaction=None):
        """Log interaction metrics"""
        self.metrics['total_interactions'] += 1
        self.metrics['popular_requests'][request_type] += 1
        if success:
            self.metrics['successful_responses'] += 1
        else:
            self.metrics['errors'] += 1

        # Update the running average response time
        current_avg = self.metrics['average_response_time']
        total = self.metrics['total_interactions']
        self.metrics['average_response_time'] = (current_avg * (total - 1) + response_time) / total

        # Compare against None so a rating of 0 is still recorded
        if satisfaction is not None:
            self.metrics['user_satisfaction'].append(satisfaction)

    def generate_report(self):
        """Generate analytics report"""
        total = self.metrics['total_interactions']
        # Guard against division by zero before any interaction is logged
        success_rate = (self.metrics['successful_responses'] / total) * 100 if total else 0.0
        ratings = self.metrics['user_satisfaction']
        avg_satisfaction = sum(ratings) / len(ratings) if ratings else 0
        return {
            'total_interactions': total,
            'success_rate': f"{success_rate:.2f}%",
            'average_response_time': f"{self.metrics['average_response_time']:.2f}s",
            'average_satisfaction': f"{avg_satisfaction:.2f}/5",
            'most_popular_requests': dict(sorted(self.metrics['popular_requests'].items(), key=lambda x: x[1], reverse=True)[:5])
        }

Troubleshooting Common Issues

API Connection Problems

import openai
from openai import OpenAI


def test_api_connection():
    """Test OpenAI API connectivity"""
    try:
        client = OpenAI()  # reads OPENAI_API_KEY from the environment
        client.chat.completions.create(
            model="gpt-3.5-turbo",
            messages=[{"role": "user", "content": "Hello"}],
            max_tokens=10
        )
        print("API connection successful")
        return True
    except openai.AuthenticationError:
        print("Invalid API key")
        return False
    except openai.RateLimitError:
        print("Rate limit exceeded")
        return False
    except Exception as e:
        print(f"Connection error: {str(e)}")
        return False

Memory Management

def optimize_memory_usage(agent):
    """Optimize agent memory usage"""
    # Limit conversation history
    if len(agent.conversation_history) > 50:
        # Keep system message and last 40 messages
        system_msg = [msg for msg in agent.conversation_history if msg.get('role') == 'system']
        recent_msgs = agent.conversation_history[-40:]
        agent.conversation_history = system_msg + recent_msgs

    # Clear old cache entries
    if hasattr(agent, 'cache') and len(agent.cache.cache) > 100:
        agent.cache.cache.clear()

    print("Memory optimized")

Scaling Your AI Agent

Horizontal Scaling

Deploy multiple agent instances. Each AIAgent keeps its own conversation history, so plain round-robin sends consecutive messages from one user to different histories; it suits stateless, single-turn requests:

from agent import AIAgent


class AgentManager:
    def __init__(self, num_agents=4):
        self.num_agents = num_agents
        self.agents = [AIAgent() for _ in range(num_agents)]
        self.current_agent = 0

    def get_next_agent(self):
        """Get next available agent (round-robin)"""
        agent = self.agents[self.current_agent]
        self.current_agent = (self.current_agent + 1) % self.num_agents
        return agent

    def process_request(self, user_input):
        """Process request with load balancing"""
        agent = self.get_next_agent()
        return agent.process_request(user_input)
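For multi-turn conversations, one option is to route by session instead of round-robin, so that the same user always reaches the agent holding their history. Here is a sketch that maps a session ID to a stable index. `pick_agent_index` is an illustrative name, and the session ID is assumed to be a string supplied by the caller.

```python
import hashlib


def pick_agent_index(session_id, num_agents):
    """Map a session ID to a stable agent index in [0, num_agents)."""
    # hashlib is used instead of hash() because str hashes vary between runs.
    digest = hashlib.sha256(session_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_agents
```

An AgentManager could then select `self.agents[pick_agent_index(session_id, self.num_agents)]`. Users who hash to the same index would still share one history, so a per-session agent or per-session history store remains the more robust design.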

Database Integration

Store conversations and user data:

from datetime import datetime

from sqlalchemy import create_engine, Column, String, DateTime, Text, Integer
# declarative_base lives in sqlalchemy.orm as of SQLAlchemy 1.4
from sqlalchemy.orm import declarative_base, sessionmaker

Base = declarative_base()


class Conversation(Base):
    __tablename__ = 'conversations'

    id = Column(Integer, primary_key=True)
    user_id = Column(String(50), index=True)
    message = Column(Text)
    response = Column(Text)
    timestamp = Column(DateTime)
    session_id = Column(String(100))


class DatabaseManager:
    def __init__(self, db_url):
        self.engine = create_engine(db_url)
        Base.metadata.create_all(self.engine)
        Session = sessionmaker(bind=self.engine)
        self.session = Session()

    def save_conversation(self, user_id, message, response, session_id):
        """Save conversation to database"""
        conversation = Conversation(
            user_id=user_id,
            message=message,
            response=response,
            timestamp=datetime.now(),
            session_id=session_id
        )
        self.session.add(conversation)
        self.session.commit()

    def get_user_history(self, user_id, limit=10):
        """Get recent conversations for user"""
        conversations = (
            self.session.query(Conversation)
            .filter(Conversation.user_id == user_id)
            .order_by(Conversation.timestamp.desc())
            .limit(limit)
            .all()
        )
        return conversations

Conclusion

Building an AI agent with ChatGPT opens up endless possibilities for automation and user interaction. You now have the complete framework to create intelligent agents that can:

- Handle natural language conversations

- Use external tools and APIs

- Remember context across interactions

- Persist history so later sessions can build on earlier ones

- Scale to handle multiple users

Key Takeaways

- Start Simple: Begin with basic conversation handling, then add features incrementally

- Focus on Tools: The real power comes from integrating external capabilities

- Test Thoroughly: Always validate your agent’s responses and handle edge cases

- Monitor Performance: Track metrics to improve your agent continuously

- Security First: Always protect API keys and validate user input

- Plan for Scale: Design your architecture to handle growth from day one

Next Steps

Ready to build your AI agent? Here’s your action plan:

- Set up your development environment – Install Python and required libraries

- Get your OpenAI API key – Sign up and generate credentials

- Build the basic agent – Start with the core conversation functionality

- Add one tool – Begin with something simple like web search

- Test extensively – Make sure everything works before adding complexity

- Deploy and iterate – Get your agent live and improve based on usage

The future of AI agents is incredibly promising. As language models become more powerful and tools become more sophisticated, the agents you build today will form the foundation for tomorrow’s intelligent automation.

Remember: the best AI agent is one that solves real problems for real people. Focus on creating value, and the technical complexity will follow naturally.

Frequently Asked Questions

How much does it cost to run an AI agent with ChatGPT?

The cost depends on usage and on the model you choose, but it's very affordable for most applications. Approximate list prices at the time of writing (check OpenAI's pricing page for current rates):

- GPT-3.5-turbo: $0.001 per 1,000 tokens (input) and $0.002 per 1,000 tokens (output)

- GPT-4: $0.03 per 1,000 tokens (input) and $0.06 per 1,000 tokens (output)

- GPT-5: A typical 500-token exchange on GPT-5 standard would cost under $0.01

Quick Comparison (Per 1,000 Tokens)

| Model | Input Cost | Output Cost |

|---|---|---|

| GPT-3.5-turbo | ~$0.001 | ~$0.002 |

| GPT-4 | ~$0.03 | ~$0.06 |

| GPT-5 (std) | ~$0.00125 | ~$0.01 |

| GPT-5 mini | ~$0.00025 | ~$0.002 |

| GPT-5 nano | ~$0.00005 | ~$0.0004 |

For context, 1,000 tokens is roughly 750 words. A typical conversation exchange might use 200-500 tokens, costing less than $0.03 with GPT-4. Most small to medium applications spend $10-100 per month.
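The arithmetic behind these estimates is simple enough to script. The sketch below uses the per-1,000-token rates from the comparison table above. The dictionary keys are labels chosen for this example rather than guaranteed API model identifiers, and the rates will drift as pricing changes.

```python
# Per-1,000-token rates (input, output) in USD, copied from the table above.
RATES = {
    "gpt-3.5-turbo": (0.001, 0.002),
    "gpt-4": (0.03, 0.06),
    "gpt-5": (0.00125, 0.01),
    "gpt-5-mini": (0.00025, 0.002),
    "gpt-5-nano": (0.00005, 0.0004),
}


def estimate_cost(model, input_tokens, output_tokens):
    """Estimate the USD cost of one exchange from its token counts."""
    input_rate, output_rate = RATES[model]
    return input_tokens / 1000 * input_rate + output_tokens / 1000 * output_rate
```

For a 500-token exchange split into 300 input and 200 output tokens, this gives 0.3 × $0.03 + 0.2 × $0.06 = $0.021 on GPT-4, and 0.3 × $0.00125 + 0.2 × $0.01 = $0.002375 on GPT-5.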

Can I build an AI agent without programming experience?

While some programming knowledge helps, you can start with basic Python skills. The examples in this guide use simple, readable code. If you’re completely new to programming:

- Learn basic Python syntax (2-3 weeks of study)

- Understand APIs and JSON (1 week)

- Follow this guide step by step

- Start with the simplest examples and build up

Many successful AI agent builders started as beginners. The key is patience and practice.

What’s the difference between building with ChatGPT API vs other AI models?

ChatGPT (OpenAI) offers several advantages:

Pros:

- Excellent natural language understanding

- Well-documented API

- Reliable performance and uptime

- Strong reasoning capabilities

- Large context window

Alternatives to consider:

- Anthropic Claude: Strong safety focus, good for content creation

- Google Gemini: Multimodal capabilities, competitive pricing

- Local models: Full control, no API costs, privacy

Choose based on your specific needs: ChatGPT is excellent for most general-purpose agents.

How do I make my AI agent remember conversations across sessions?

Implement persistent storage using databases or file systems:

# Simple file-based memory
import json
import os


class PersistentMemory:
    def __init__(self, user_id):
        self.user_id = user_id
        self.memory_file = f"memory/{user_id}.json"

    def save_conversation(self, conversation):
        os.makedirs("memory", exist_ok=True)
        with open(self.memory_file, 'w') as f:
            json.dump(conversation, f)

    def load_conversation(self):
        if os.path.exists(self.memory_file):
            with open(self.memory_file, 'r') as f:
                return json.load(f)
        return []

For production applications, use databases like PostgreSQL or MongoDB for better performance and reliability.

How can I prevent my AI agent from giving wrong or harmful information?

Implement several safety measures:

Input Validation:

def validate_request(user_input):
    banned_topics = ['illegal activities', 'harmful instructions']
    if any(topic in user_input.lower() for topic in banned_topics):
        return False, "I can't help with that topic."
    return True, "Valid request"

Output Filtering:

def filter_response(response):
    if 'disclaimer needed' in response.lower():
        response += "\n\nNote: This information is for educational purposes only."
    return response

System Prompts: Always include safety instructions in your system prompt:

You are a helpful assistant. Always:

- Provide accurate information based on your training

- Say "I don't know" when uncertain

- Refuse to help with illegal or harmful requests

- Include disclaimers for medical, legal, or financial advice

Human Oversight: For critical applications, implement human review for sensitive responses or flag uncertain answers for manual verification.

Regular Testing: Continuously test your agent with edge cases and adversarial inputs to identify potential issues before they affect users.
